What Is LSTM?

A long short-term memory (LSTM) network is a type of recurrent neural network (RNN). LSTMs are predominantly used to learn, process, and classify sequential data because these networks can learn long-term dependencies between time steps of data. Common LSTM applications include sentiment analysis, language modeling, speech recognition, and video analysis.

LSTM Applications and Examples

The examples below use MATLAB® and Deep Learning Toolbox™ to apply LSTM in specific applications. Beginners can get started with LSTM networks through this simple example: Time Series Forecasting Using LSTMs.

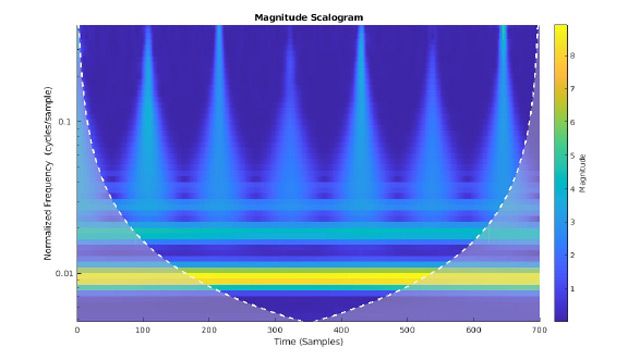

Radar Target Classification

Classify radar returns using a Long Short-Term Memory (LSTM) recurrent neural network in MATLAB

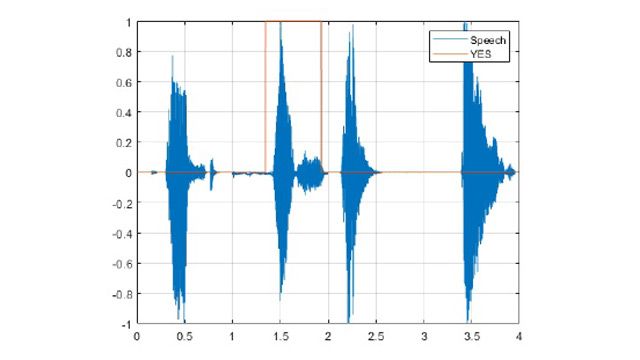

Keyword Spotting

Wake up a system when a user speaks a predefined keyword

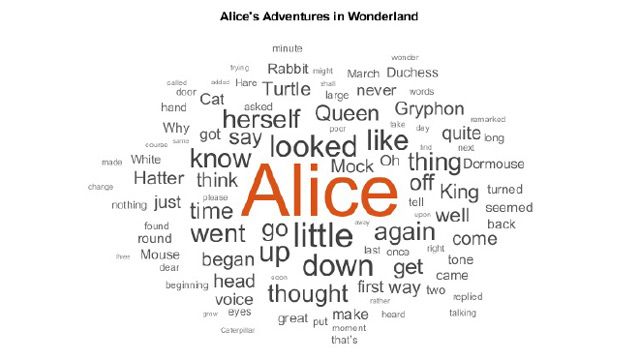

Text Generation

Train a deep learning LSTM network to generate text word-by-word

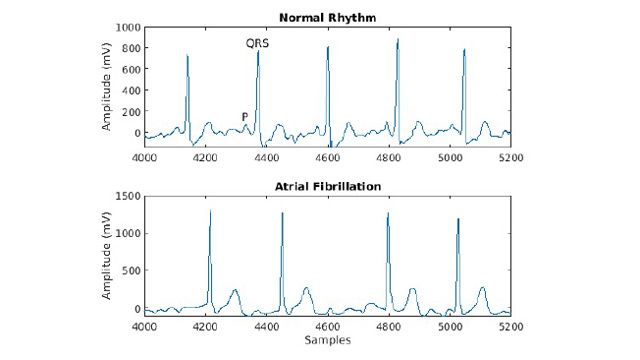

Classifying ECG Signals

Categorize ECG signals, which record the electrical activity of a person's heart over time, as Normal or AFib

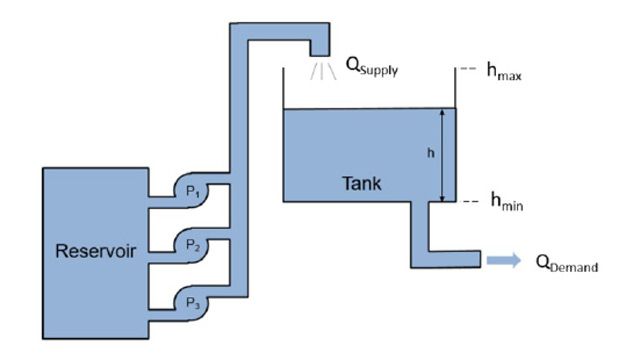

Water Distribution System Scheduling

Generate an optimal pump scheduling policy for a water distribution system using reinforcement learning (RL)

Video Classification

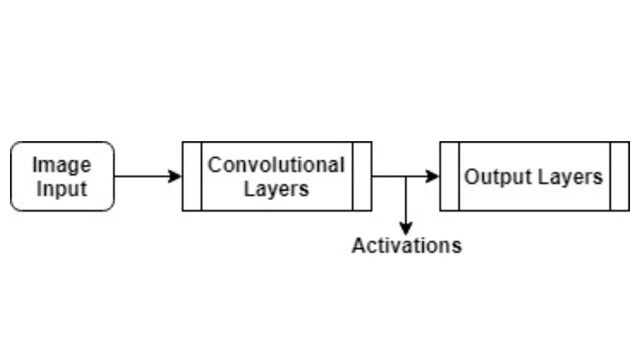

Classify video by combining a pretrained image classification model and an LSTM network

Technical Features of RNN and LSTM

LSTM networks are a specialized form of RNN architecture. The differences between the architectures and the advantages of LSTMs are highlighted in this section.

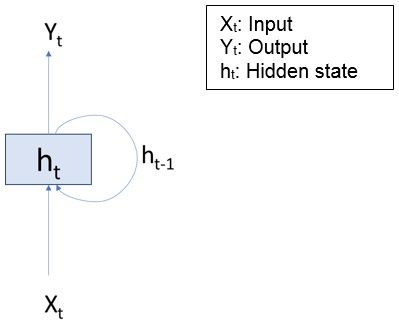

Basic structure of recurrent neural network (RNN).
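For reference, the update performed by a simple RNN at each time step is commonly written as follows. The notation is an assumption rather than something this article defines: $x_t$ is the input at time step $t$, $h_t$ is the hidden state, $W$ and $R$ are the input and recurrent weights, $b$ is the bias, and $\tanh$ is one typical choice of activation.

$$
h_t = \tanh\left(W x_t + R\, h_{t-1} + b\right)
$$

The same weights are reused at every time step, so information from early inputs reaches later steps only through repeated application of this update.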

In practice, simple RNNs are limited in their capacity to learn longer-term dependencies. RNNs are commonly trained through backpropagation through time, during which they may experience either a ‘vanishing’ or an ‘exploding’ gradient problem. These problems cause the gradients, and therefore the weight updates, to become either very small or very large, limiting effectiveness in applications that require the network to learn long-term relationships.
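The effect can be illustrated with a deliberately simplified sketch in plain Python. It assumes a scalar, linear recurrence `h_t = w * h_{t-1}` (no activation function and no input term), which is not how a real RNN is built but is enough to show how a gradient scales as it is propagated back through many time steps. The function name and the values of `w` are hypothetical.

```python
def gradient_through_time(w, steps):
    """Return d h_T / d h_0 for the linear scalar recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        # Each step back in time multiplies the gradient by the recurrent weight.
        grad *= w
    return grad


# Compare a recurrent weight slightly below, equal to, and slightly above 1.
for w in (0.9, 1.0, 1.1):
    print(f"w = {w}: gradient after 50 steps = {gradient_through_time(w, 50):.3e}")
```

A weight slightly below 1 drives the gradient toward zero (vanishing), while a weight slightly above 1 makes it grow without bound (exploding). In both cases the learning signal from distant time steps becomes unusable.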

To overcome this issue, LSTM networks use additional gates to control what information in the hidden cell is passed to the output and to the next hidden state. The additional gates allow the network to learn long-term relationships in the data more effectively. Because LSTM networks are less sensitive to the length of the time gap between related events, they are better suited than simple RNNs to analyzing sequential data.

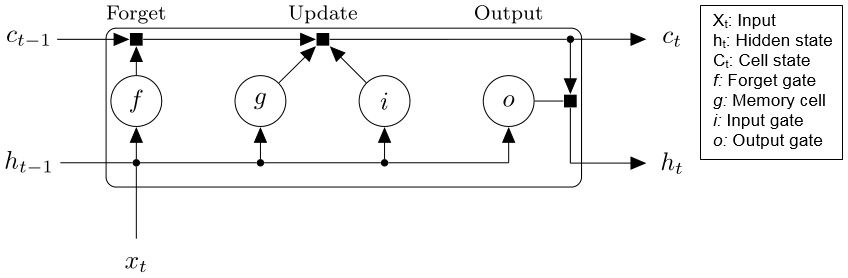

In addition to the hidden state in traditional RNNs, the architecture for an LSTM block typically has a memory cell, input gate, output gate, and forget gate, as shown below.

In comparison to RNN, long short-term memory (LSTM) architecture has more gates to control information flow.

The weights and biases of the input gate control the extent to which a new value flows into the cell. Similarly, the weights and biases of the forget gate control the extent to which a value remains in the cell, and those of the output gate control the extent to which the value in the cell is used to compute the output activation of the LSTM block.
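The roles of the three gates can be made concrete with a minimal sketch of one LSTM time step in plain Python. It assumes the commonly used formulation (sigmoid gates, a tanh candidate value, and an additive cell update) and works on scalars for readability. The names `lstm_step` and `params`, and the layout of weights as `(w, r, b)` tuples, are hypothetical and are not the Deep Learning Toolbox API.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a scalar LSTM block.

    p maps each of 'i', 'f', 'g', 'o' to a (w, r, b) tuple:
    input weight, recurrent weight, and bias (illustrative names).
    """
    def pre(name):
        w, r, b = p[name]
        return w * x + r * h_prev + b

    i = sigmoid(pre("i"))    # input gate: how much of the new value enters the cell
    f = sigmoid(pre("f"))    # forget gate: how much of the old cell value remains
    g = math.tanh(pre("g"))  # candidate value that may be written into the cell
    o = sigmoid(pre("o"))    # output gate: how much of the cell reaches the output

    c = f * c_prev + i * g   # updated memory cell
    h = o * math.tanh(c)     # output activation, also the next hidden state
    return h, c


# Biases chosen so the forget and output gates are almost fully open
# and the input gate is almost fully closed.
params = {
    "i": (0.0, 0.0, -50.0),
    "f": (0.0, 0.0, 50.0),
    "g": (1.0, 0.0, 0.0),
    "o": (0.0, 0.0, 50.0),
}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=2.0, p=params)
print(h, c)
```

With these illustrative biases the previous cell value is carried forward essentially unchanged regardless of the input, which is the mechanism that lets an LSTM retain information across many time steps.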

For more details on the LSTM network, see Deep Learning Toolbox™.

Software Reference

See also: MATLAB for deep learning, machine learning, MATLAB for data science, GPU computing, artificial intelligence